SQL*Loader – complete reference

SQL*Loader

SQL*Loader is a high-speed data loading utility that loads data from external files into tables in an Oracle database. SQL*Loader accepts input data in a variety of formats, can perform filtering, and can load data into multiple Oracle database tables during the same load session. SQL*Loader is an integral feature of Oracle databases and is available in all configurations.

Commands and Parameters

SQL*Loader can be invoked in one of three ways:

sqlldr

sqlldr keyword=value [keyword=value ...]

sqlldr value [value ...]

Valid Keywords/Parameters:

| Bad | Specifies the name of the bad file. You may include a path as part of the name. By default, the bad file takes the name of the control file, but with a .bad extension, and is written to the same directory as the control file. If you specify a different name, the default extension is still .bad. However, if you use the BAD parameter to specify a bad file name, the default directory becomes your current working directory. If you are loading data from multiple files, then this bad file name only gets associated with the first file being loaded. |

| Bindsize | Specifies the maximum size, in bytes, of the bind array. This parameter overrides any bind array size computed as a result of using the ROWS parameter. The default bind array size is 65,536 bytes, or 64K. |

| Columnarrayrows | Number of rows for direct path column array (Default 5000) |

| Control | Specifies the name, which may include the path, of the control file. The default extension is .ctl. |

| Data | Specifies the name of the file containing the data to load. You may include a path as part of the name. By default, the name of the control file is used, but with the .dat extension. If you specify a different name, the default extension is still .dat. If you are loading from multiple files, you can only specify the first file name using this parameter. Place the names of the other files in their respective INFILE clauses in the control file. |

| date_cache | Size (in entries) of date conversion cache (Default 1000) |

| direct | = {TRUE | FALSE} Determines the data path used for the load. A value of FALSE results in a conventional path load. A value of TRUE results in a direct path load. The default is FALSE. |

| Discard | Specifies the name of the discard file. You may include a path as part of the name. By default, the discard file takes the name of the control file, but it has a .dis extension. If you specify a different name, the default extension is still .dis. If you are loading data from multiple files, then this discard file name only gets associated with the first file being loaded. |

| Discardmax | Sets an upper limit on the number of logical records that can be discarded before a load will terminate. The limit is actually one less than the value specified for DISCARDMAX. When the number of discarded records becomes equal to the value specified for DISCARDMAX, the load will terminate. The default is to allow an unlimited number of discards. However, since DISCARDMAX only accepts numeric values, it is not possible to explicitly specify the default behavior. |

| Errors | Specifies a limit on the number of errors to tolerate before the load is aborted. The default is to abort a load when the error count exceeds 50. There is no way to allow an unlimited number of errors. The best you can do is to specify a very high number for this parameter. |

| external_table | Determines whether any data will be loaded using external tables. Valid options are NOT_USED, GENERATE_ONLY, and EXECUTE (default NOT_USED). |

| File | Specifies the database data file from which to allocate extents. Use this parameter when doing parallel loads, to ensure that each load session is using a different disk. If you are not doing a direct path load, this parameter will be ignored. |

| Load | Specifies a limit on the number of logical records to load. The default is to load all records. Since LOAD only accepts numeric values, it is not possible to explicitly specify the default behavior. |

| Log | Specifies the name of the log file to generate for a load session. You may include a path as well. By default, the log file takes on the name of the control file, but with a .log extension, and is written to the same directory as the control file. If you specify a different name, the default extension is still .log. However, if you use the LOG parameter to specify a name for the log file, it will no longer be written automatically to the directory that contains the control file. |

| Multithreading | Use multithreading in direct path loads. The default is TRUE on multiple-CPU systems and FALSE on single-CPU systems. |

| Parallel | = {TRUE | FALSE} Indicates whether or not you are doing a direct path parallel load. If you are loading the same object from multiple direct path load sessions, then set this to TRUE. Otherwise, set it to FALSE. The default is FALSE. |

| Parfile | Tells SQL*Loader to read command-line parameter values from a text file. This text file is referred to as a parameter file, and contains keyword/value pairs. Usually, the keyword/value pairs are separated by line breaks. Use of the PARFILE parameter can save a lot of typing if you need to perform the same load several times, because you won’t need to retype all the command-line parameters each time. There is no default extension for parameter files. |

| Readsize | Specifies the size of the buffer used by SQL*Loader when reading data from the input file. The default value is 65,536 bytes, or 64K. The values of the READSIZE and BINDSIZE parameters should match. If you supply values for these two parameters that do not match, SQL*Loader will adjust them. |

| Resumable | Enable or disable resumable space allocation for the current session (Default FALSE) |

| resumable_name | Text string to help identify resumable statement. This parameter is ignored unless RESUMABLE = Y |

| resumable_timeout | Wait time (in seconds) for RESUMABLE (Default 7200). The time period in which an error must be fixed. This parameter is ignored unless RESUMABLE = Y |

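| Rows | For a conventional path load, specifies the number of rows in the bind array, and therefore the number of rows inserted per commit (default 64). For a direct path load, specifies the number of rows to read between data saves (default: all rows). |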

| Silent | Suppresses various header and feedback messages that SQL*Loader normally displays during a load session. ALL suppresses all the messages. Other valid options are DISCARDS, ERRORS, FEEDBACK, HEADER, and PARTITIONS. |

| Skip | Allows you to continue an interrupted load by skipping the specified number of logical records. If you are continuing a multiple table direct path load, you may need to use the CONTINUE_LOAD clause in the control file rather than the SKIP parameter on the command line. CONTINUE_LOAD allows you to specify a different number of rows to skip for each table that you are loading. |

| skip_index_maintenance | = {TRUE | FALSE} Controls whether or not index maintenance is done for a direct path load. This parameter does not apply to conventional path loads. A value of TRUE causes index maintenance to be skipped. Any index segments (partitions) that should have been updated will be marked as unusable. A value of FALSE causes indexes to be maintained as they normally would be. The default is FALSE. |

| skip_unusable_indexes | = {TRUE | FALSE} Controls the manner in which a load is done when a table being loaded has indexes in an unusable state. A value of TRUE causes SQL*Loader to load data into tables even when those tables have indexes marked as unusable. The indexes will remain unusable at the end of the load. One caveat is that if a UNIQUE index is marked as unusable, the load will not be allowed to proceed. A value of FALSE causes SQL*Loader not to insert records when those records need to be recorded in an index marked as unusable. For a conventional path load, this means that any records that require an unusable index to be updated will be rejected as errors. For a direct path load, this means that the load will be aborted the first time such a record is encountered. The default is FALSE. |

| Streamsize | Size of direct path stream buffer in bytes (Default 256000) |

| Userid | {username[/password] [@net_service_name]|/} Specifies the username and password to use when connecting to the database. The net_service_name parameter optionally allows you to connect to a remote database. Use a forward-slash character ( / ) to connect to a local database using operating system authentication. NOTE: On Unix systems you should generally avoid placing a password on the command line, because that password will be displayed whenever other users issue a command, such as ps -ef, that displays a list of current processes running on the system. |

To check which options are available in any release of SQL*Loader use this command:

sqlldr help=y

PLEASE NOTE:

In addition to being passed by keyword, parameters may also be passed by position. To do this, you simply list the values after the sqlldr command in the correct order. For example, the following two SQL*Loader commands yield identical results:

sqlldr system/manager profile.ctl profile.log

sqlldr userid=system/manager control=profile.ctl log=profile.log

You can even mix the positional and keyword methods of passing command-line parameters. The one rule when doing this is that all positional parameters must come first. Once you start using keywords, you must continue to do so. For example:

sqlldr system/manager control=profile.ctl log=profile.log

When you pass parameters positionally, you must not skip any, and you must supply the values in the order that SQL*Loader expects. Positional parameters may appear before, but not after, parameters specified by keyword. For example, system/manager control=profile.ctl log=profile.log is allowed, but system/manager control=profile.ctl profile.log is not, even though the position of the log parameter is correct.
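The positional-before-keyword rule can be modelled in a few lines of Python. This is only a sketch of the rule as described here, not SQL*Loader's own parser. The function name parse_args is made up for illustration, and the slot order covers just the three parameters used in the examples (userid, control, log); check the full order against sqlldr help=y for your release.

```python
# Positional slots in the order implied by the examples in the text.
# Assumption: only the first three slots are modelled here.
POSITIONAL_ORDER = ["userid", "control", "log"]


def parse_args(tokens):
    """Map sqlldr-style tokens to a {keyword: value} dict.

    Positional values must come first; once a keyword=value token
    appears, every later token must also be keyword=value.
    """
    params = {}
    seen_keyword = False
    for index, token in enumerate(tokens):
        if "=" in token:
            keyword, value = token.split("=", 1)
            params[keyword.lower()] = value
            seen_keyword = True
        elif seen_keyword:
            raise ValueError(f"positional value {token!r} follows a keyword")
        elif index >= len(POSITIONAL_ORDER):
            raise ValueError(f"no positional slot modelled for {token!r}")
        else:
            params[POSITIONAL_ORDER[index]] = token
    return params


if __name__ == "__main__":
    # Mixed form: one positional value, then keywords.
    print(parse_args(["system/manager", "control=profile.ctl", "log=profile.log"]))
```

Passing system/manager control=profile.ctl profile.log to this sketch raises ValueError, mirroring the disallowed form described above.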

Parameter Precedence

The term “command-line” notwithstanding, most SQL*Loader command-line parameters can actually be specified in three different places:

- On the command line

- In a parameter file, which is then referenced using the PARFILE parameter

- In the control file

Parameters on the command line, including those read in from a parameter file, will always override values specified in the control file. In the case of the bad and discard file names, though, the control file syntax allows for each distinct input file to have its own bad file and discard files. The command line syntax does not allow for this, so bad file and discard file names specified on the command line only apply to the first input file. For any other input files, you need to specify these bad and discard file names in the control file or accept the defaults.

The FILE parameter adds a bit of confusion to the rules stated in the previous paragraph. As with the bad file and discard file names, you can have multiple FILE values in the control file. However, when you specify the FILE parameter on the command line, it does override any and all FILE values specified in the control file.

Parameters read from a parameter file as a result of using the PARFILE parameter may override those specified on the command line. Whether or not that happens depends on the position of the PARFILE parameter with respect to the others. SQL*Loader processes parameters from left to right, and the last setting for a given parameter is the one that SQL*Loader uses.
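The left-to-right rule is easy to get wrong, so here is a small Python model of it. It is a sketch of the behaviour described in the paragraph above, not SQL*Loader code. The function name resolve, the file name load.par, and the parameter values are all hypothetical, and the parameter file is represented as an in-memory list rather than read from disk.

```python
def resolve(tokens, parfiles):
    """Apply keyword=value tokens left to right; the last setting wins.

    `parfiles` maps a parameter-file name to the keyword=value lines
    it contains (a stand-in for reading the file from disk).
    """
    settings = {}
    for token in tokens:
        keyword, value = token.split("=", 1)
        keyword = keyword.lower()
        if keyword == "parfile":
            # Expand the parameter file at the position where it appears.
            for line in parfiles[value]:
                par_key, par_value = line.split("=", 1)
                settings[par_key.strip().lower()] = par_value.strip()
        else:
            settings[keyword] = value
    return settings


if __name__ == "__main__":
    parfiles = {"load.par": ["errors=100", "rows=500"]}
    # PARFILE last: the file's errors=100 overrides the earlier errors=10.
    print(resolve(["errors=10", "parfile=load.par"], parfiles))
    # PARFILE first: the later errors=10 overrides the file's value.
    print(resolve(["parfile=load.par", "errors=10"], parfiles))
```

If you want command-line values to win, the model suggests placing PARFILE first and the overrides after it.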

The SQL*Loader Environment

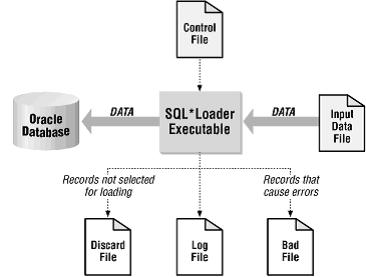

When we speak of the SQL*Loader environment, we are referring to the database, the SQL*Loader executable, and all the different files that you need to be concerned with when using SQL*Loader. These are shown in Figure 1-1.

Figure 1-1. The SQL*Loader environment

The functions of the SQL*Loader executable, the database, and the input data file are rather obvious. The SQL*Loader executable does the work of reading the input file and loading the data. The input file contains the data to be loaded, and the database receives the data.

Although Figure 1-1 doesn’t show it, SQL*Loader is capable of loading from multiple files in one session. When multiple input files are used, SQL*Loader will generate multiple bad files and discard files, one set for each input file.

File Types

SQL*Loader Control File

The control file is a text file written in a language that SQL*Loader understands. The control file tells SQL*Loader where to find the data (Input Data File), how to parse and interpret the data, and where to insert the data.

One can load data into an Oracle database by using the sqlldr (sqlload on some platforms) utility. Invoke the utility without arguments to get a list of available parameters. Look at the following example:

sqlldr username@server/password control=loader.ctl

sqlldr username/password@server control=loader.ctl

This sample control file (loader.ctl) will load an external data file containing delimited data:

load data

infile 'c:\data\mydata.csv'

into table emp

fields terminated by "," optionally enclosed by '"'

( empno, empname, sal, deptno )

The mydata.csv file may look like this:

10001,"Scott Tiger", 1000, 40

10002,"Frank Naude", 500, 20

Here is another sample control file, this time with in-line data formatted as fixed-length records. The trick is to specify “*” as the name of the data file, and use BEGINDATA to start the data section in the control file:

load data

infile *

replace

into table departments

( dept position (02:05) char(4),

deptname position (08:27) char(20)

)

begindata

 COSC  COMPUTER SCIENCE

 ENGL  ENGLISH LITERATURE

 MATH  MATHEMATICS

 POLY  POLITICAL SCIENCE
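To see what position (02:05) and position (08:27) select, it can help to slice a record by hand. The Python sketch below assumes the data lines are laid out so that the department code occupies columns 2 to 5 and the name starts in column 8 (one leading blank, then two blanks between the fields). The helper name slice_field is made up, and stripping trailing blanks is a simplification of how SQL*Loader treats fixed-position CHAR fields by default.

```python
def slice_field(record, start, end):
    """Return the text in 1-based, inclusive columns start..end,
    with trailing blanks removed."""
    return record[start - 1:end].rstrip()


# Assumed layout: column 1 blank, code in 2-5, blanks in 6-7, name from 8.
records = [
    " COSC  COMPUTER SCIENCE",
    " ENGL  ENGLISH LITERATURE",
]

for record in records:
    dept = slice_field(record, 2, 5)
    deptname = slice_field(record, 8, 27)
    print(dept, "|", deptname)
```

Note that POSITION uses 1-based, inclusive column numbers, which is why the slice starts at start - 1.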

Input Data and Datafiles

SQL*Loader reads data from one or more files specified in the control file. From SQL*Loader’s perspective, the data in the datafile is organized as records. A particular datafile can be in fixed record format, variable record format, or stream record format. The record format can be specified in the control file with the INFILE parameter. If no record format is specified, the default is stream record format.

If data is specified inside the control file (that is, INFILE * was specified in the control file), then the data is interpreted in the stream record format with the default record terminator.

LOBFILEs

LOB data can be lengthy enough that it makes sense to load it from a LOBFILE. LOB data instances are still considered to be in fields, but these fields are not organized into records. Therefore, the processing overhead of dealing with records is avoided. This type of organization of data is ideal for LOB loading.

Bulk Loads

You can use SQL*Loader to bulk load objects, collections, and LOBs. SQL*Loader supports the following bulk loads:

- Two object types: column objects and row objects

- Load data from multiple datafiles during the same load session

- Two collection types: nested tables and VARRAYs

- Four LOB types: BLOBs, CLOBs, NCLOBs, and BFILEs.

Load Methods

SQL*Loader provides three methods to load data: Conventional Path, Direct Path, and External Table.

Conventional Path Load

Conventional path load builds an array of rows to be inserted and uses the SQL INSERT statement to load the data. During conventional path loads, input records are parsed according to the field specifications, and each data field is copied to its corresponding bind array. When the bind array is full (or no more data is left to read), an array insert is executed.

Direct Path Load

A direct path load builds blocks of data in memory and saves these blocks directly into the extents allocated for the table being loaded. A direct path load uses the field specifications to build whole Oracle blocks of data, and write the blocks directly to Oracle datafiles, bypassing much of the data processing that normally takes place. Direct path load is much faster than conventional load, but entails some restrictions.

A parallel direct path load allows multiple direct path load sessions to concurrently load the same data segments. Parallel direct path is more restrictive than direct path.

External Table Load

An external table load creates an external table for data in a datafile and executes INSERT statements to insert the data from the datafile into the target table.

There are two advantages of using external table loads over conventional path and direct path loads:

- An external table load attempts to load datafiles in parallel. If a datafile is big enough, it will attempt to load that file in parallel.

- An external table load allows modification of the data being loaded by using SQL functions and PL/SQL functions as part of the INSERT statement that loads the data from the external table into the target table.

SQL*Loader is flexible and offers many options that should be considered to maximize the speed of data loads. These include:

- Use Direct Path Loads. The conventional path loader essentially loads the data by using standard insert statements. The direct path loader (direct=true) loads directly into the Oracle data files and creates blocks in Oracle database block format. The fact that SQL is not being issued makes the entire process much less taxing on the database. There are certain cases, however, in which direct path loads cannot be used (for example, clustered tables). To prepare the database for direct path loads, the script $ORACLE_HOME/rdbms/admin/catldr.sql must be executed.

- Disable Indexes and Constraints. For conventional data loads only, the disabling of indexes and constraints can greatly enhance the performance of SQL*Loader.

- Use a Larger Bind Array. For conventional data loads only, larger bind arrays limit the number of calls to the database and increase performance. The size of the bind array is specified using the bindsize parameter. The bind array’s size is equivalent to the number of rows it contains (rows=) times the maximum length of each row.

- Use ROWS=n to Commit Less Frequently. For conventional data loads only, the rows parameter specifies the number of rows per commit. Issuing fewer commits will enhance performance.

- Use Parallel Loads. Available with direct path data loads only, this option allows multiple SQL*Loader jobs to execute concurrently, for example:

  $ sqlldr control=first.ctl parallel=true direct=true

  $ sqlldr control=second.ctl parallel=true direct=true

- Use Fixed Width Data. Fixed width data format saves Oracle some processing when parsing the data. The savings can be tremendous, depending on the type of data and number of rows.

- Disable Archiving During Load. While this may not be feasible in certain environments, disabling database archiving can increase performance considerably.

- Use unrecoverable. The unrecoverable option (unrecoverable load data) disables the writing of the data to the redo logs. This option is available for direct path loads only.
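The bind array tip above comes down to simple arithmetic: rows times maximum row length. The Python sketch below works through it. The 100-byte row length is a made-up figure for illustration, and the real calculation also includes per-field overhead that this model ignores, so treat the numbers as rough estimates.

```python
def bind_array_bytes(rows, max_row_bytes):
    """Approximate bind array size needed for `rows` rows."""
    return rows * max_row_bytes


def rows_that_fit(bindsize, max_row_bytes):
    """Approximate number of rows a bind array of `bindsize` bytes can hold."""
    return bindsize // max_row_bytes


if __name__ == "__main__":
    max_row_bytes = 100  # hypothetical maximum row length

    # rows=10000 would need about 1,000,000 bytes for this row length...
    print(bind_array_bytes(10000, max_row_bytes))

    # ...but bindsize=512000 only has room for about 5120 such rows.
    print(rows_that_fit(512000, max_row_bytes))
```

Because BINDSIZE caps the bind array, raising ROWS alone may not help; the two settings are best chosen together.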

Using the table table_with_one_million_rows, the following benchmark tests were performed with the various SQL*Loader options. The table was truncated after each test.

| SQL*Loader Option | Elapsed Time (Seconds) | Time Reduction |
| --- | --- | --- |
| direct=false rows=64 | 135 | – |
| direct=false bindsize=512000 rows=10000 | 92 | 32% |
| direct=false bindsize=512000 rows=10000 database in noarchivelog mode | 85 | 37% |
| direct=true | 47 | 65% |
| direct=true unrecoverable | 41 | 70% |
| direct=true unrecoverable fixed width data | 41 | 70% |

Table 1.1 – Results indicate conventional path loads take longest.

The results above indicate that conventional path loads take the longest. However, the bindsize and rows parameters can aid the performance under these loads. The test involving the conventional load didn’t come close to the performance of the direct path load with the unrecoverable option specified.

It is also worth noting that the fastest import time achieved for this table in an earlier test was 67 seconds, compared to 41 for SQL*Loader direct path, a 39% reduction in execution time. In this test, at least, SQL*Loader loaded the same data faster than import.
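The percentages quoted for these tests are plain relative reductions against a baseline. The short Python check below recomputes them from the elapsed times in the table; the function name reduction_pct is just for illustration.

```python
def reduction_pct(baseline_seconds, elapsed_seconds):
    """Percentage reduction in elapsed time relative to the baseline,
    rounded to the nearest whole percent."""
    return round(100 * (baseline_seconds - elapsed_seconds) / baseline_seconds)


if __name__ == "__main__":
    baseline = 135  # direct=false rows=64
    for elapsed in (92, 85, 47, 41):
        print(elapsed, "seconds:", reduction_pct(baseline, elapsed), "% reduction")

    # Import (67 seconds) versus SQL*Loader direct path (41 seconds).
    print(reduction_pct(67, 41), "% reduction versus import")
```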

These tests did not compensate for indexes. All database load operations will execute faster when indexes are disabled.

Possible Issues while loading the data

Recovery from Failure

There are really only two fundamental ways that you can recover from a failed load. One approach is to delete all the data that was loaded before the failure occurred, and simply start over again. Of course, you need to fix whatever caused the failure to occur before you restart the load.

The other approach is to determine how many records were loaded successfully, and to restart the load from that point forward. Regardless of which method you choose, you need to think things through before you start a load.

Deleting data and restarting a load from scratch really doesn’t require any special functionality on the part of SQL*Loader. The important thing is that you have a reliable way to identify the data that needs to be deleted. SQL*Loader does, however, provide support for continuing an interrupted load from the point where a failure occurred. Using the SKIP command-line parameter or the SKIP clause in the control file, you can tell SQL*Loader to skip over records that were already processed in order to have the load pick up from where it left off previously.

Transaction Size

Transaction size is an issue related somewhat to performance, and somewhat to recovery from failure. In a conventional load, SQL*Loader allows you to specify the number of rows that will be loaded between commits. The number of rows that you specify has a direct impact on the size of the bind array that SQL*Loader uses, and consequently on the amount of memory required for the load. The bind array is an area in memory where SQL*Loader stores data for rows to be inserted into the database. When the bind array fills, SQL*Loader inserts the data into the table being loaded, and then executes a COMMIT.

The larger the transaction size, the more data you’ll need to reprocess if you have to restart the load after a failure. However, that’s usually not a significant issue unless your bind array size is quite large. Transaction size can also affect performance. Generally, the more data loaded in one chunk the better. So a larger bind array size typically will lead to better performance. However, it will also lead to fewer commits, resulting in the use of more rollback segment space.

Data Validation

Data validation is always a concern when loading data. SQL*Loader doesn’t provide a lot of support in this area, but there are some features at your disposal that can help you ensure that only good data is loaded into your database.

The one thing that SQL*Loader does do for you is ensure that the data being loaded into a column is valid given the column’s datatype. Text data will not be loaded into NUMBER fields, and numbers will not be loaded into DATE fields. This much, at least, you can count on. Records containing data that doesn’t convert to the destination datatype are rejected and written to the bad file.

SQL*Loader allows you to selectively load data. Using the WHEN clause in your SQL*Loader control file, you can specify conditions under which a record will be accepted. Records not meeting those conditions are not loaded, and are instead written to the discard file.

Finally, you can take advantage of the referential integrity features built into your database. SQL*Loader won’t be able to load data that violates any type of primary key, unique key, foreign key, or check constraint.

TIP: You don’t always have to rely on SQL*Loader’s features for data validation. It’s entirely feasible to load data into a staging table, run one or more external programs to weed out any rows that are invalid, and then transfer that data to a production table.

Data Transformation

Wouldn’t it be great if the data we loaded was always in a convenient format? Unfortunately, it frequently is not. In the real world, you may deal with data from a variety of sources and systems, and the format of that data may not match the format that you are using in your database. Dates, for example, are represented using a wide variety of formats. The date 1/2/2000 means one thing in the United States and quite another in Europe.

For dates and numbers, you can often use Oracle’s built-in TO_DATE and TO_NUMBER functions to convert a character-based representation to a value that can be loaded into a database DATE or NUMBER column. In fact, for date fields, you can embed the date format into your control file as part of the field definition.

SQL*Loader allows you access to Oracle’s entire library of built-in SQL functions. You aren’t limited to just TO_DATE, TO_NUMBER, and TO_CHAR. Not only can you access all the built-in SQL functions, you can also write PL/SQL code to manipulate the data being loaded.
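The date ambiguity mentioned above is easy to demonstrate outside the database. The Python sketch below parses the same string with two explicit format masks. It is only an analogy: in a control file you would use an Oracle date mask such as MM/DD/YYYY or DD/MM/YYYY rather than Python's strptime codes.

```python
from datetime import datetime


def parse_date(text, mask):
    """Parse `text` using an explicit format mask."""
    return datetime.strptime(text, mask).date()


raw = "1/2/2000"

us_reading = parse_date(raw, "%m/%d/%Y")   # month first
eu_reading = parse_date(raw, "%d/%m/%Y")   # day first

print(us_reading)  # 2000-01-02
print(eu_reading)  # 2000-02-01
```

The input is identical in both calls; only the mask decides whether the value is January 2 or February 1, which is why it pays to state the format explicitly in the field definition.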

Checklist before starting SQL*Loader

Check Environment Variables

The first and most important thing to take into account is setting the environment variables correctly before starting SQL*Loader. Check the value of the environment variables on the machine from which you are starting your SQL*Loader session.

How to check your environment variables (e.g. NLS_LANG):

– UNIX

$ env | grep NLS_LANG

– Windows

Start -> Run -> regedit -> HKEY_LOCAL_MACHINE\SOFTWARE\ORACLE\<HOMEx>\NLS_LANG

1) Check NLS_LANG setting

Set NLS_LANG to the desired territory and characterset to prevent wrong data from being loaded and to avoid errors caused by that data.

Syntax: NLS_LANG=<Language_Territory.Characterset>

Example: NLS_LANG="Dutch_The_Netherlands.WE8ISO8859P15"
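If you want to check the setting from a script rather than by eye, the value can be split into its three parts. The Python sketch below follows the Language_Territory.Characterset syntax shown above; the function name split_nls_lang and the sample value AMERICAN_AMERICA.AL32UTF8 are illustrative, and the split assumes the language name itself contains no underscore.

```python
import os


def split_nls_lang(value):
    """Split 'Language_Territory.Characterset' into its three parts."""
    locale_part, _, charset = value.partition(".")
    language, _, territory = locale_part.partition("_")
    return language, territory, charset


if __name__ == "__main__":
    current = os.environ.get("NLS_LANG")
    if current is None:
        print("NLS_LANG is not set in this environment")
    else:
        print(split_nls_lang(current))

    # Illustrative value, not read from the environment.
    print(split_nls_lang("AMERICAN_AMERICA.AL32UTF8"))
```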

Possible problems due to an incorrect value:

– ORA-1722 invalid number

Reason:

NLS_NUMERIC_CHARACTERS is equal to ‘,.’ or ‘.,’ based upon the Territory (NLS_LANG) you are in.

Example:

The NLS_LANG setting above results in ‘,.’ for NLS_NUMERIC_CHARACTERS.

The number 13.4 is not valid in this case and produces ORA-1722.

– Characters not loaded correctly due to an incorrect characterset specified.

Reason:

The characterset specified needs to be the characterset of the data to be loaded (unless the CHARACTERSET keyword is used).
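The ORA-1722 case above can be reproduced in miniature. The Python sketch below is a deliberately simplified model of numeric conversion under a given NLS_NUMERIC_CHARACTERS value (first character is the decimal character, second is the group separator). It is not Oracle's conversion routine, and the function name to_number is only borrowed for readability. It assumes, as with an unformatted conversion, that group separators are not accepted in the input.

```python
def to_number(text, numeric_chars):
    """Convert text to a float, accepting only digits, a sign, and the
    session's decimal character (numeric_chars[0])."""
    decimal_char = numeric_chars[0]
    allowed = set("0123456789+-") | {decimal_char}
    if not text or any(ch not in allowed for ch in text):
        raise ValueError("invalid number (ORA-1722 in the real database): " + repr(text))
    return float(text.replace(decimal_char, "."))


if __name__ == "__main__":
    print(to_number("13,4", ",."))   # comma is the decimal character here
    print(to_number("13.4", ".,"))   # period is the decimal character here
    try:
        to_number("13.4", ",.")      # period is not valid under ',.'
    except ValueError as exc:
        print(exc)
```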

2) Check ORACLE_HOME:

Set ORACLE_HOME to the Oracle home from which you want SQL*Loader to be started, and make sure the other environment variables match that home.

Example: ORACLE_HOME=/u01/app/oracle/product/9.0.1

In Windows you can set your primary ORACLE_HOME using the Home Selector:

Start -> Programs -> Oracle Installation Products -> Home Selector

Possible problem due to an incorrect value:

– ORA-12560: TNS: protocol adapter error

Reason:

The SQL*Net connection fails because settings from different Oracle homes are mixed.

3) Check LD_LIBRARY_PATH (Unix only):

Check whether $ORACLE_HOME/lib is included in LD_LIBRARY_PATH.

Example: LD_LIBRARY_PATH=$ORACLE_HOME/lib:$LD_LIBRARY_PATH

Possible problem:

– libwtc8 library cannot be found.
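The LD_LIBRARY_PATH check can be scripted. The Python sketch below tests whether the lib directory of a given Oracle home appears among the colon-separated entries; the function name lib_dir_on_path is made up, and the paths in the demo simply reuse the example ORACLE_HOME from above.

```python
import os
import posixpath


def lib_dir_on_path(oracle_home, ld_library_path):
    """Return True if <oracle_home>/lib is one of the path entries."""
    wanted = posixpath.normpath(posixpath.join(oracle_home, "lib"))
    entries = [posixpath.normpath(p) for p in ld_library_path.split(":") if p]
    return wanted in entries


if __name__ == "__main__":
    home = os.environ.get("ORACLE_HOME", "/u01/app/oracle/product/9.0.1")
    path = os.environ.get("LD_LIBRARY_PATH", "")
    print(lib_dir_on_path(home, path))
```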

4) Check ORA_NLS33 (or ORA_NLS32) setting

Example: ORA_NLS33=$ORACLE_HOME/ocommon/nls/admin/data

Possible problem due to an incorrect value:

– Segmentation Fault; Core Dump

Reason:

ORA_NLS33 points to the $ORACLE_HOME of another installation.

For example, the ORACLE_HOME where your Developer software is installed.

Limits / Defaults

1) Check the field lengths of the data to be loaded

Specify a length for the fields defined in the control file based upon the data to be loaded. Also check that the data to be loaded fits in the target table columns.

A variable length field defaults to 255 bytes for a CHAR. If no datatype is specified, it defaults to a CHAR of 255 bytes as well.

See the section ‘Calculating the Size of Field Buffers’ in the ‘SQL*Loader Control File Reference’ chapter of the Oracle ‘Utilities Manual’.

Possible errors are:

2) Check the datafile File Size Limit on your Operating System (Unix only)

On Unix, the filesize is limited by the shell’s filesize limit.

Set the limit of your filesize with the ulimit (ksh and sh) or limit (csh) command to a value larger than the size of your SQL*Loader datafile.
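The same comparison can be made from a script. The Python sketch below reads the process's file size limit with the standard resource module (Unix only) and compares it with a file's size in bytes. The helper name fits_within_limit and the file name mydata.dat are hypothetical, and note that the ulimit command may report the limit in blocks rather than bytes.

```python
import os
import resource  # available on Unix platforms only


def fits_within_limit(file_size, soft_limit):
    """Return True if a file of file_size bytes is within the limit."""
    return soft_limit == resource.RLIM_INFINITY or file_size <= soft_limit


if __name__ == "__main__":
    soft, _hard = resource.getrlimit(resource.RLIMIT_FSIZE)
    datafile = "mydata.dat"  # hypothetical datafile name
    if os.path.exists(datafile):
        print(fits_within_limit(os.path.getsize(datafile), soft))
    else:
        print("soft file size limit:", "unlimited" if soft == resource.RLIM_INFINITY else soft)
```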

Editor’s note: Much of the content has been extracted from O’Reilly and Oracle documents. For educational purposes only.
